From decentralised DevOps teams to one-click deployments with Kubernetes and Terraform - DevOps at ABOUT YOU


When I started working for ABOUT YOU two years ago, there was an Operations team ("Ops" for short) and many small DevOps teams in the individual departments. Together, they formed the basis for running our systems. However, given ABOUT YOU's rapid growth and high pace of innovation, this organisational pattern soon became less than optimal. Although the DevOps teams in the specialist departments were self-sufficient, there were no standardised solutions. Many problems were solved suboptimally, and often redundantly, in several teams at once. On the one hand, this led to unnecessary, duplicated effort; on the other, it enormously increased the complexity of each specialist area. It became clear to us that we had to rethink the way we delivered new platform functionality to new customers.

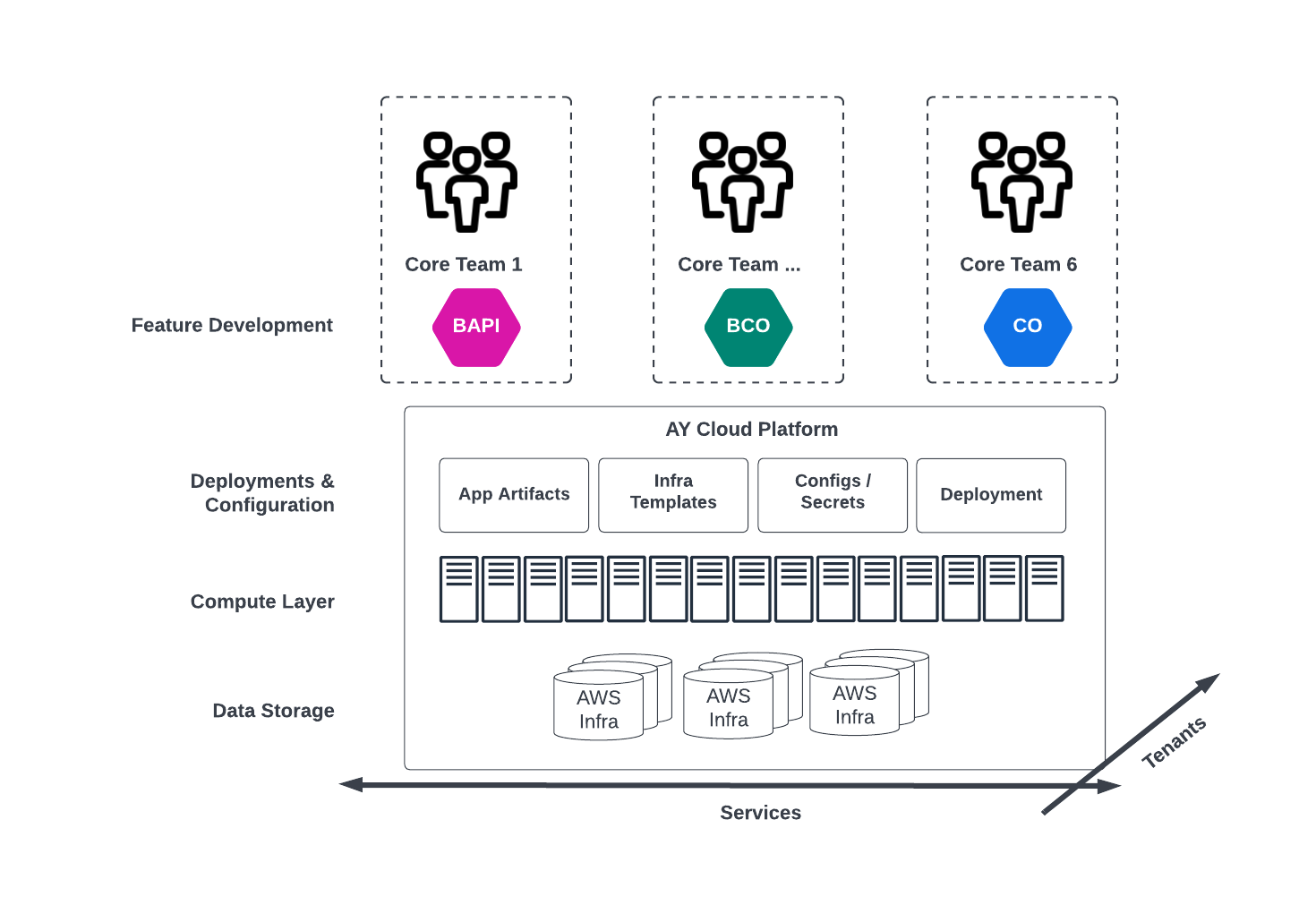

Some context: at ABOUT YOU, we operate not only aboutyou.com - one of Europe’s fastest-growing online fashion platforms of scale - but also our own e-commerce platform and solutions that we provide to large customers as a SaaS under the SCAYLE brand.

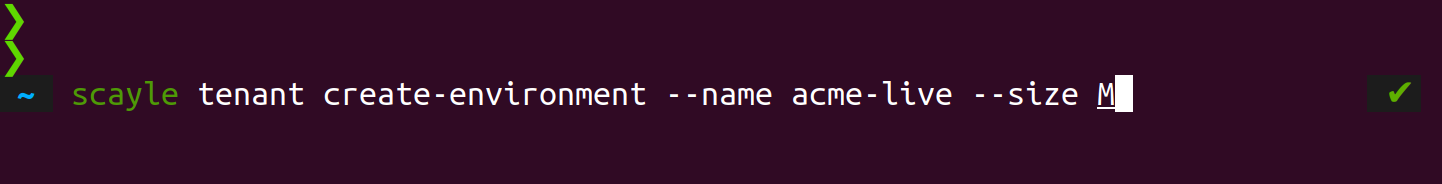

In particular, provisioning new environments (preview, test, live) for new, large customers had always been a headache for us. For a long time, we did far too much manually and involved too many product teams in setting up a new customer. As a result, it sometimes took us over nine weeks to set up a test system for a new customer. What we really needed was a "1-click tenant deployment": the ability to set up the environment for a new customer automatically, with a single click.

With this idea in mind, I went back to the individual teams and started to build an overview of all the systems and configurations needed to set up a new tenant. But that was easier said than done. All of a sudden, questions came up like: what exactly do we mean by "tenant" anyway? What does a tenant configuration actually include? In which systems and teams do tenants have to be set up today? Can we manage the entire tenant configuration in a single, central location?

Consolidating and centralising

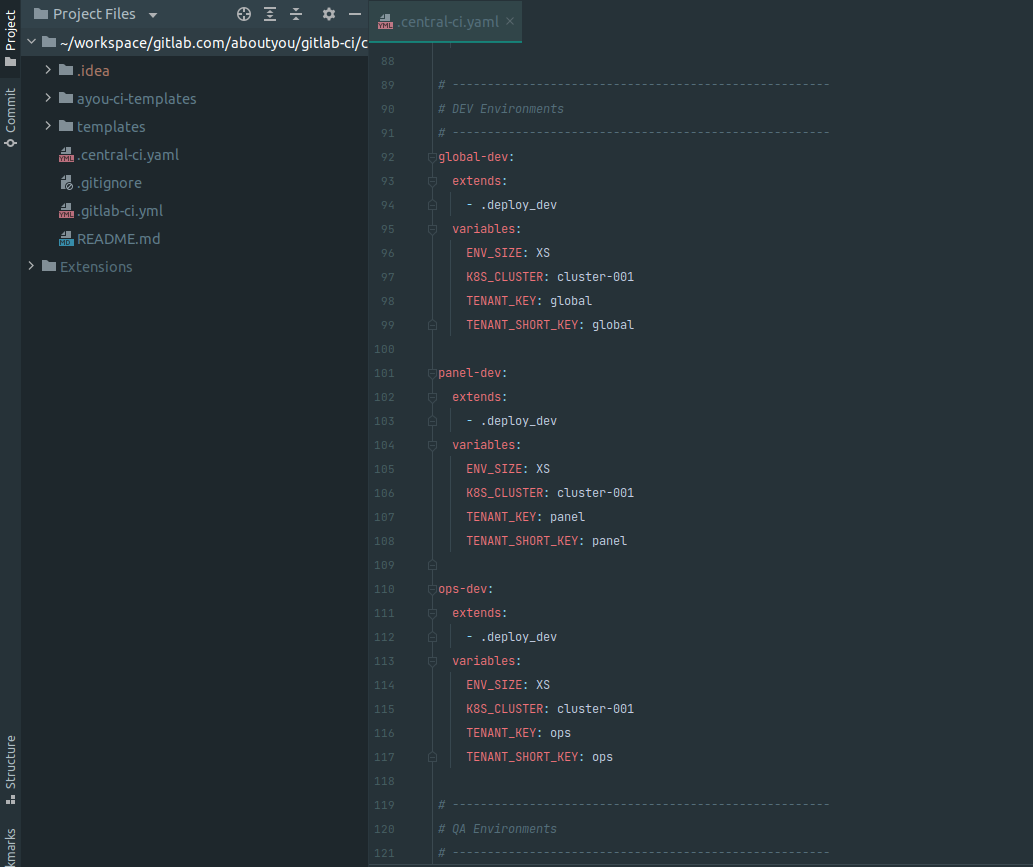

We quickly realised that we had to consolidate the widely scattered tenant configuration in one central location. We formed a team with members from each product team and worked together until we had captured every tenant-dependent variable, no matter how well hidden, and discussed each one: do we still need this variable? Does it really depend on the tenant? Could we use a global variable for the deployment of the system instead?

The result was one central YAML file per tenant with over 100 variables. Today, only four of them are needed to set up a new tenant; the rest can be reconfigured at any time.
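To make the idea of "four required variables, everything else defaulted" concrete, here is a minimal Python sketch. It assumes the tenant's YAML file has already been parsed into a dictionary (so no YAML library is needed), and the four required key names, the default values and the `build_tenant_config` helper are all hypothetical: the article does not list the actual variables.

```python
from copy import deepcopy

# Hypothetical names: the article does not say which four variables are required.
REQUIRED_KEYS = ("tenant_id", "customer_name", "primary_domain", "aws_region")

# Hypothetical platform-wide defaults; any of them can be overridden per tenant.
DEFAULTS = {
    "environments": ["preview", "test", "live"],
    "replicas": 2,
    "feature_flags": {"search": True, "wishlist": False},
}


def build_tenant_config(tenant_values: dict) -> dict:
    """Check the required keys, then lay the tenant's values over the defaults."""
    missing = [key for key in REQUIRED_KEYS if key not in tenant_values]
    if missing:
        raise ValueError(f"missing required tenant variables: {missing}")
    # deepcopy keeps callers from mutating the shared DEFAULTS by accident.
    config = deepcopy(DEFAULTS)
    # Top-level keys only: a tenant value replaces the whole default entry.
    config.update(tenant_values)
    return config


acme = build_tenant_config({
    "tenant_id": "acme",
    "customer_name": "ACME Fashion",
    "primary_domain": "acme.example",
    "aws_region": "eu-central-1",
    "replicas": 4,  # optional override of a default
})
```

Because every default can be overridden by an entry in the tenant's own file, values can be changed later without touching the required set. Note that this sketch merges at the top level only, so a tenant that sets `feature_flags` replaces the whole default dictionary.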

Some of you might be wondering why we didn't build a more sophisticated tenant configuration: simply because, so far, we haven't needed the added complexity. Just having the complete tenant configuration in one repository, and thus being able to use it centrally in our Infrastructure-as-Code workflows, is worth its weight in gold and is surprisingly pragmatic.

Clean tenant encapsulation with the benefits of an efficient multi-tenant solution through Kubernetes namespaces

We used to do a lot of work directly with AWS, using EC2 instances that we managed and deployed with AWS CloudFormation. This was nice and pragmatic at first, but it soon became clear that we needed to rethink how we would deliver services in the future. We needed a clean separation of tenants, both to run customer-specific settings and to keep customer data securely apart. At the same time, it was extremely important for us to use our hosting resources efficiently and realise economies of scale. After a few trials with EC2 and ECS, it became clear that we would have had to build much of the automation ourselves, at great expense: secret handling, customer-specific domains and certificates, and the sharing of resources to optimise efficiency. Kubernetes already offered many of these features. With Kubernetes and standardised Helm charts, we were able to give our teams a container-driven way of collaborating without having to reinvent the wheel.
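The article does not spell out how tenants map onto Kubernetes objects. Assuming one namespace per tenant and environment (an assumption suggested by the section heading), a small Python sketch could render the manifests for each tenant. The naming scheme, the `tenant`/`environment` labels and the quota values are hypothetical; `Namespace`, `ResourceQuota` and the `List` wrapper are standard Kubernetes `v1` kinds, and namespace names must be valid DNS-1123 labels.

```python
import json
import re

# Kubernetes namespace names must be DNS-1123 labels:
# lowercase alphanumerics and '-', starting and ending alphanumeric, max 63 chars.
_DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def namespace_name(tenant_id: str, environment: str) -> str:
    """Hypothetical naming scheme: one namespace per tenant and environment."""
    name = f"{tenant_id}-{environment}".lower()
    if len(name) > 63 or not _DNS1123_LABEL.fullmatch(name):
        raise ValueError(f"invalid namespace name: {name!r}")
    return name


def tenant_manifests(tenant_id: str, environment: str,
                     cpu: str = "8", memory: str = "16Gi") -> dict:
    """Return a v1 List holding a Namespace and a ResourceQuota for one tenant."""
    ns = namespace_name(tenant_id, environment)
    labels = {"tenant": tenant_id, "environment": environment}
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": ns, "labels": labels},
    }
    # Caps the total resource requests of all pods in this tenant's namespace.
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": ns, "labels": labels},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


print(json.dumps(tenant_manifests("acme", "test"), indent=2))
```

The printed JSON could be piped into `kubectl apply -f -`. A per-namespace quota is one way to share cluster capacity between tenants while preventing any single tenant from starving the others.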

Thus, the switch to Kubernetes was a done deal. By the way, we had underestimated the importance of separating the tenant configuration from the deployment. It is really worth investing a little more time up front here, so that not every service has to be rebuilt and redeployed whenever a small change is made to the tenant configuration. We rely heavily on environment variables and the AWS Systems Manager Parameter Store for this.
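One way to keep tenant settings out of the build is to resolve them when a service starts or handles a request. The Python sketch below assumes a lookup order (an injected environment variable first, then a parameter-store path) and a `/tenants/<tenant>/<key>` naming scheme; both are hypothetical. The parameter-store call is passed in as a function so the sketch runs without AWS access.

```python
import os
from typing import Callable, Mapping, Optional

# A parameter-store lookup: takes a parameter name, returns its value or None.
Fetcher = Callable[[str], Optional[str]]


def env_var_name(key: str) -> str:
    """Map a setting key to an env var name, e.g. "checkout.currency" -> "CHECKOUT_CURRENCY"."""
    return key.upper().replace(".", "_").replace("-", "_")


def resolve_setting(tenant_id: str, key: str, fetch_parameter: Fetcher,
                    environ: Optional[Mapping[str, str]] = None) -> str:
    """Resolve a tenant setting at runtime instead of baking it into the image."""
    env = os.environ if environ is None else environ
    # 1. A value injected into the container's environment takes precedence.
    value = env.get(env_var_name(key))
    if value is not None:
        return value
    # 2. Otherwise look it up under a hypothetical per-tenant parameter path.
    value = fetch_parameter(f"/tenants/{tenant_id}/{key}")
    if value is None:
        raise KeyError(f"no value for {key!r} (tenant {tenant_id!r})")
    return value
```

In production, `fetch_parameter` could wrap boto3's SSM client, for example `lambda name: ssm.get_parameter(Name=name, WithDecryption=True)["Parameter"]["Value"]`; that call raises `ParameterNotFound` rather than returning `None`, so real code would catch it. Keep in mind that environment variables are fixed when a container starts, so changing them still means restarting pods, whereas values read from the parameter store at runtime can change without any redeploy.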

| INFOBOX: YAGNI - You Ain't Gonna Need It |
|---|
| At ABOUT YOU, we build according to the YAGNI principle. This means we try to keep our solutions lean and only add features or technology when we really need them. In DevOps, we therefore relied on plain EC2 instances rather than Kubernetes for a long time. The switch to Kubernetes only made sense once we expanded our multi-tenant architecture. |

Terraform and Helm instead of CloudFormation

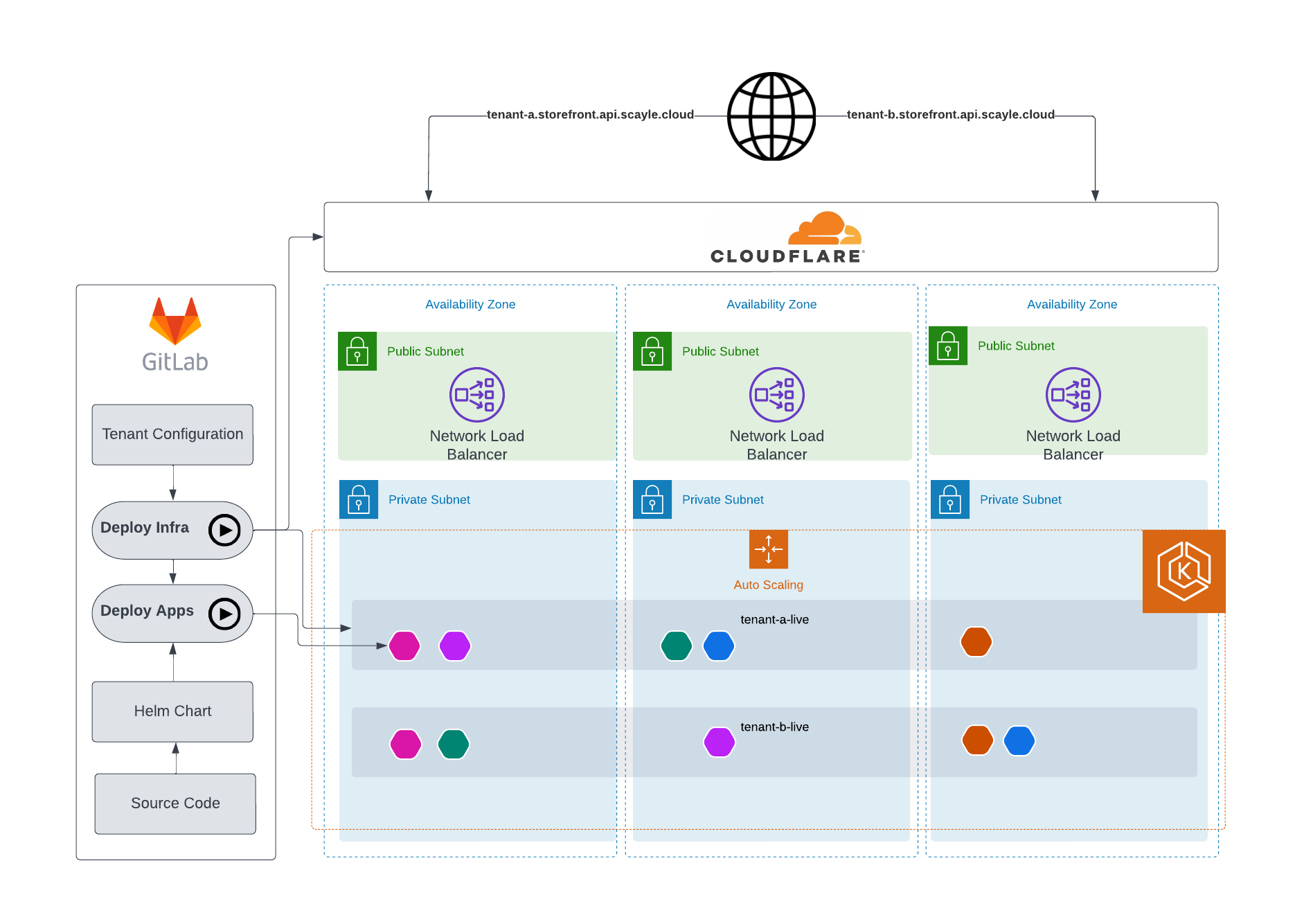

To deploy all the services a tenant needs, we required an Infrastructure as Code solution that also supports non-AWS providers such as Cloudflare, Datadog and Akamai. That is one of the reasons we chose Terraform: besides AWS, all major hosting providers and services are supported out of the box through its provider ecosystem.

After some testing, we started building central repositories containing all our Helm charts and Terraform modules and storing them in our GitLab. We also use GitLab's CI/CD pipelines to conveniently run and test our Terraform code.

By now, we deploy all AWS services, the Helm charts (for the containers in AWS EKS/Kubernetes), Cloudflare and Akamai with Terraform. To keep our Terraform code from becoming too complex given the many tenants and environments, we also put the handy tool "Terragrunt" in front of Terraform. Much like common package managers such as yarn or npm, Terragrunt gives you one central command across different environments and lets you keep your Terraform modules lean and modular.
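Terragrunt's own configuration is written in HCL; the Python sketch below only illustrates the layering idea behind keeping modules lean: shared defaults, then environment-specific values, then tenant-specific values, with the most specific layer winning. The layer contents and the `deep_merge`/`module_inputs` helpers are hypothetical and do not reflect how Terragrunt is implemented.

```python
from copy import deepcopy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict: nested dicts are merged recursively, other values replaced."""
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def module_inputs(*layers: dict) -> dict:
    """Merge layers in order; later (more specific) layers win."""
    merged: dict = {}
    for layer in layers:
        merged = deep_merge(merged, layer)
    return merged


# Hypothetical layers: global defaults, one environment, one tenant.
GLOBAL = {"datadog": {"enabled": True}, "eks": {"node_count": 3, "instance_type": "m5.large"}}
LIVE = {"eks": {"node_count": 6}}
TENANT_ACME = {"cloudflare": {"zone": "acme.example"}}

inputs = module_inputs(GLOBAL, LIVE, TENANT_ACME)
```

With such a hierarchy, adding a tenant means adding one small tenant layer, while the Terraform modules that consume `inputs` stay unchanged.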

| INFOBOX: Terragrunt |
|---|
| Terragrunt is a wrapper around the infrastructure-as-code tool Terraform. The motivation is that large Terraform projects with many environments quickly become confusing and therefore difficult to maintain. Terragrunt adds another level of abstraction in front of Terraform in order to structure complex projects sensibly and keep the actual Terraform code as lean as possible. https://terragrunt.gruntwork.io/ |

Just 1 click? Where we are today

We went live with our first Kubernetes test cluster, running a first prototype of the new DevOps infrastructure, in January 2021. Just two months later, we were able to provide the first major customer with its environment on the new architecture: a super team achievement! We are now gradually migrating all systems to the new platform. Our setup time for new customer environments has been reduced from up to nine weeks to just one day. We now spend much more time configuring the tenant together with the customer than deploying the infrastructure, which really is a one-click process. It's a great feeling and a solid basis for the growth of our platform.

Our learnings and next goals

What we underestimated at the start of our DevOps conversion and tenant unification was the organisational change it required within the team. Although we are usually organised very decentrally in product teams, we had to concentrate DevOps responsibility in a central platform team for the project to succeed. It helped that the colleagues in the product teams were extremely motivated to automate the many manual steps involved in creating a new tenant, so that they could use the new infrastructure themselves as quickly as possible. This created a super workflow within the team, and everyone was able to contribute their product perspective in the best possible way.

Our next goal is to have all new tenants migrated to the new architecture before Black Friday 2022. Since we sometimes have tenants with millions of requests per minute, this will still be an exciting challenge, but I am sure that we will master this with the new architecture. To optimise our Kubernetes deployments, we are currently considering using the Kubernetes operator pattern to ensure the stability of the entire application in an even more automated way. Overall, though, we are already very happy with the new architecture and our product teams can now get their cool changes live much faster. True to the motto: You build it. You run it. You own it.
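The operator pattern boils down to a reconciliation loop: read the desired state, compare it with the actual state, and act only on the difference. The Python sketch below shows that idea with an in-memory stand-in for the cluster; `TenantSpec`, `ClusterState` and the action strings are hypothetical, and a real operator would watch custom resources through the Kubernetes API, for example with the Operator SDK or Kopf.

```python
from dataclasses import dataclass, field


@dataclass
class TenantSpec:
    """Desired state, as an operator would read it from a custom resource."""
    name: str
    replicas: int


@dataclass
class ClusterState:
    """In-memory stand-in for what the cluster actually runs: name -> replicas."""
    deployments: dict[str, int] = field(default_factory=dict)


def reconcile(desired: list[TenantSpec], actual: ClusterState) -> list[str]:
    """Bring `actual` in line with `desired` and return the actions taken."""
    actions = []
    wanted = {spec.name: spec.replicas for spec in desired}
    for name, replicas in sorted(wanted.items()):
        current = actual.deployments.get(name)
        if current is None:
            actions.append(f"create {name} with {replicas} replicas")
        elif current != replicas:
            actions.append(f"scale {name} from {current} to {replicas}")
        # Apply the change to the in-memory stand-in.
        actual.deployments[name] = replicas
    # Anything running that is no longer desired gets removed.
    for name in sorted(set(actual.deployments) - set(wanted)):
        actions.append(f"delete {name}")
        del actual.deployments[name]
    return actions


state = ClusterState({"acme": 2, "old-tenant": 1})
first_run = reconcile([TenantSpec("acme", 4), TenantSpec("globex", 2)], state)
```

Running `reconcile` again with unchanged input returns no actions. This idempotence is what lets an operator run the loop continuously and repair drift automatically.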